Upgrading a k8s Cluster Version


Environment

Cluster version: kubernetes v1.15.3

Deployment method: kubeadm

OS version: CentOS Linux release 7.6.1810 (Core)

Cluster nodes: ops-k8s-m1, ops-k8s-m2, ops-k8s-m3 (3 masters in total)

Preparation

Configure the yum repository

```shell
cat > /etc/yum.repos.d/k8s.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF
```

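Change the image repository the cluster was initialized with. The default is k8s.gcr.io, and the upgrade pulls the new control-plane images from it, so switching to a mirror avoids pull failures caused by network problems. The command:

```shell
kubectl edit cm kubeadm-config -n kube-system
```

Find `imageRepository`, change its value to the Aliyun mirror `registry.aliyuncs.com/google_containers`, and save. Only the relevant part of the config is shown here:

```yaml
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.15.3
```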
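If you prefer a non-interactive edit to `kubectl edit`, the substitution can be scripted. This is a sketch of an alternative, not the author's method: the helper name `set_image_repo` is hypothetical, and it assumes the `imageRepository:` line appears once in the ConfigMap dump, inside the embedded `ClusterConfiguration` block.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the imageRepository value in a kubeadm-config
# dump read from stdin, keeping the line's original indentation.
set_image_repo() {
  local repo="$1"
  sed -E "s#^([[:space:]]*imageRepository:).*#\1 ${repo}#"
}

# Usage against a live cluster (review the output before replacing):
# kubectl -n kube-system get cm kubeadm-config -o yaml \
#   | set_image_repo registry.aliyuncs.com/google_containers \
#   | kubectl replace -f -
```

Piping through the helper once without `kubectl replace` lets you inspect the result first.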

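How to find a stable Kubernetes version

The command below lists every available version of kubeadm, kubectl and kubelet. The last patch release of each minor series is the stable one.

```shell
yum list --showduplicates kubeadm kubectl kubelet --disableexcludes=kubernetes
```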
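Picking the last patch release of a minor series from that listing can be scripted. A minimal sketch: `latest_patch` is a hypothetical helper, and the usage pipeline assumes yum prints the version in the second column in the form `1.15.12-0`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read bare versions (e.g. 1.15.12) on stdin, one per line,
# and print the highest patch release of the minor series given as $1 (e.g. 1.15).
latest_patch() {
  local want="$1" v patch best=-1
  while IFS= read -r v; do
    case "$v" in
      "$want".*) patch="${v#"$want".}" ;;
      *) continue ;;
    esac
    # Skip anything whose patch part is not a plain number.
    case "$patch" in ''|*[!0-9]*) continue ;; esac
    if [ "$patch" -gt "$best" ]; then best="$patch"; fi
  done
  [ "$best" -ge 0 ] && echo "${want}.${best}"
}

# Usage (yum prints lines such as "kubeadm.x86_64  1.15.12-0  kubernetes"):
# yum list --showduplicates kubeadm --disableexcludes=kubernetes \
#   | awk '/^kubeadm/ {print $2}' | sed 's/-.*//' | latest_patch 1.15
```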

Upgrading kubernetes

It turns out you cannot go directly from kubernetes v1.15.3 to kubernetes v1.19.16. kubeadm refuses because the version gap is too large:

```text
[root@ops-k8s-m1 k8s]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[upgrade/config] FATAL: this version of kubeadm only supports deploying clusters with the control plane version >= 1.18.0. Current version: v1.15.3
To see the stack trace of this error execute with --v=5 or higher
```
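kubeadm only supports moving the control plane one minor version at a time (patch upgrades within a minor are fine), so going from 1.15 to 1.19 means passing through every minor in between. A small sketch that lists the hops; `upgrade_path` is a hypothetical helper, it expects minor versions such as `1.15` (not full patch versions), and it only handles minors within the same major version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every minor version to step through, in order,
# when going from minor $1 (e.g. 1.15) to minor $2 (e.g. 1.19).
upgrade_path() {
  local major="${1%%.*}" from="${1#*.}" to="${2#*.}" m
  for ((m = from + 1; m <= to; m++)); do
    echo "${major}.${m}"
  done
}

# Example: upgrade_path 1.15 1.19 prints 1.16, 1.17, 1.18 and 1.19, one per line.
```

Each printed minor then needs its own stable patch release, which is how the article ends up at 1.16.15, 1.17.17, 1.18.20 and 1.19.16.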

So I decided to first upgrade to the stable release of the 1.15.x series, which turned out to be v1.15.12.

Upgrade kubernetes v1.15.3 to kubernetes v1.15.12

Check the current cluster

```text
[root@ops-k8s-m1 k8s]# kubectl get node
NAME         STATUS   ROLES    AGE    VERSION
ops-k8s-m1   Ready    master   344d   v1.15.3
ops-k8s-m2   Ready    master   344d   v1.15.3
ops-k8s-m3   Ready    master   344d   v1.15.3
```

I upgraded ops-k8s-m1 first. Back everything up before upgrading; if the nodes are virtual machines, take a snapshot as well.

```shell
cp -ar /etc/kubernetes /etc/kubernetes.bak
cp -ar /var/lib/etcd /var/lib/etcd.bak
```
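Copying `/var/lib/etcd` while etcd is running may not produce a consistent copy, so an etcd snapshot is a common complement. The sketch below assumes `etcdctl` (v3 API) is installed on the host and that the kubeadm default certificates under `/etc/kubernetes/pki/etcd/` (including `healthcheck-client.crt`) are in use; the function names and the timestamped backup paths are hypothetical. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: back up kubeadm config/PKI and etcd before an upgrade.
backup_control_plane() {
  local ts
  ts="$(date +%Y%m%d%H%M%S)"
  run cp -a /etc/kubernetes "/etc/kubernetes.bak.${ts}"
  run cp -a /var/lib/etcd "/var/lib/etcd.bak.${ts}"
  # Point-in-time snapshot from the local etcd member (kubeadm cert paths assumed).
  run env ETCDCTL_API=3 etcdctl \
    --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt \
    --key=/etc/kubernetes/pki/etcd/healthcheck-client.key \
    snapshot save "/var/lib/etcd-snapshot-${ts}.db"
}
```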

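Drain the node: evict its pods and mark it unschedulable

```shell
kubectl drain ops-k8s-m1 --ignore-daemonsets --force
```

Upgrade the kubeadm, kubelet and kubectl packages

```shell
yum install -y kubeadm-1.15.12 kubelet-1.15.12 kubectl-1.15.12 --disableexcludes=kubernetes
```

Check the upgrade plan

```shell
kubeadm upgrade plan
```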

Run the upgrade

```shell
kubeadm upgrade apply v1.15.12
```

Upgrade output:

```text
[root@ops-k8s-m1 k8s]# kubeadm upgrade apply v1.15.12
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Making sure the cluster is healthy:
[upgrade/version] You have chosen to change the cluster version to "vv1.15.12"
[upgrade/versions] Cluster version: v1.15.3
[upgrade/versions] kubeadm version: v1.15.12
[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y
[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]
[upgrade/prepull] Prepulling image for component etcd.
[upgrade/prepull] Prepulling image for component kube-apiserver.
[upgrade/prepull] Prepulling image for component kube-controller-manager.
[upgrade/prepull] Prepulling image for component kube-scheduler.
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd
[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler
[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager
[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver
[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-etcd
[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler
....
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[addons]: Migrating CoreDNS Corefile
[addons] Applied essential addon: CoreDNS
[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.15.12". Enjoy!
```

Restart kubelet

```shell
systemctl daemon-reload
systemctl restart kubelet
```
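The per-node steps above can be collected into one function, together with the `kubectl uncordon` that the official kubeadm upgrade guide runs at the end (a drained node otherwise stays unschedulable). This is a sketch, not the author's script. The function name is hypothetical. It follows the official ordering of upgrading kubeadm before kubelet/kubectl, which differs slightly from the article's single `yum install`. On the remaining masters it runs `kubeadm upgrade node`, which the official guide uses on additional control-plane nodes, instead of `kubeadm upgrade apply`. `DRY_RUN=1` prints the commands.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: upgrade one control-plane node to version $2 (e.g. 1.15.12).
# Set FIRST=1 on the first master so it runs "kubeadm upgrade apply".
upgrade_control_plane_node() {
  local node="$1" version="$2"
  run kubectl drain "$node" --ignore-daemonsets --force
  # kubeadm first, so it can upgrade the control-plane static pods.
  run yum install -y "kubeadm-${version}" --disableexcludes=kubernetes
  if [ "${FIRST:-0}" = 1 ]; then
    run kubeadm upgrade apply -y "v${version}"
  else
    run kubeadm upgrade node
  fi
  # Then kubelet/kubectl, and restart kubelet so the new binary is used.
  run yum install -y "kubelet-${version}" "kubectl-${version}" --disableexcludes=kubernetes
  run systemctl daemon-reload
  run systemctl restart kubelet
  # Make the node schedulable again.
  run kubectl uncordon "$node"
}
```

Usage: `FIRST=1 upgrade_control_plane_node ops-k8s-m1 1.15.12` on the first master, then `upgrade_control_plane_node ops-k8s-m2 1.15.12` on ops-k8s-m2, and likewise on ops-k8s-m3.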

Check the cluster version again

```text
[root@ops-k8s-m1 k8s]# kubectl get node
NAME         STATUS   ROLES    AGE    VERSION
ops-k8s-m1   Ready    master   15m    v1.15.12
ops-k8s-m2   Ready    master   344d   v1.15.3
ops-k8s-m3   Ready    master   344d   v1.15.3
```

Next, upgrade the other two masters the same way (I've omitted the steps here). Once they are done, check the cluster version again:

```text
[root@ops-k8s-m1 k8s]# kubectl get node
NAME         STATUS   ROLES    AGE   VERSION
ops-k8s-m1   Ready    master   15m   v1.15.12
ops-k8s-m2   Ready    master   30m   v1.15.12
ops-k8s-m3   Ready    master   30m   v1.15.12
```
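Rather than eyeballing the VERSION column after each round, the check can be scripted. `nodes_not_at` is a hypothetical helper; the `-o jsonpath` expression in the usage comment prints each node's name and its reported kubelet version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "node version" pairs on stdin and print every
# node whose version differs from $1 (e.g. v1.15.12). No output means all match.
nodes_not_at() {
  awk -v want="$1" '$2 != want { print $1 }'
}

# Usage:
# kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' \
#   | nodes_not_at v1.15.12
```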

Upgrade kubernetes v1.15.12 to kubernetes v1.16.15

Upgrade commands

```shell
yum install -y kubeadm-1.16.15 kubelet-1.16.15 kubectl-1.16.15 --disableexcludes=kubernetes
kubeadm upgrade plan
kubeadm upgrade apply v1.16.15
systemctl daemon-reload
systemctl restart kubelet
kubectl get node
```

The upgrade went smoothly:

```text
[root@ops-k8s-m1 k8s]# kubectl get node
NAME         STATUS   ROLES    AGE   VERSION
ops-k8s-m1   Ready    master   26h   v1.16.15
ops-k8s-m2   Ready    master   27h   v1.16.15
ops-k8s-m3   Ready    master   27h   v1.16.15
```

Trying to save time, I attempted to jump straight to 1.19.16, but it still failed. The only way is to upgrade one minor version at a time:

```text
[root@ops-k8s-m1 k8s]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[upgrade/config] FATAL: this version of kubeadm only supports deploying clusters with the control plane version >= 1.18.0. Current version: v1.16.15
To see the stack trace of this error execute with --v=5 or higher
```

Upgrade kubernetes v1.16.15 to kubernetes v1.17.17

Upgrade commands

```shell
yum install -y kubeadm-1.17.17 kubelet-1.17.17 kubectl-1.17.17 --disableexcludes=kubernetes
kubeadm upgrade plan
kubeadm upgrade apply v1.17.17
systemctl daemon-reload
systemctl restart kubelet
kubectl get node
```

I ran into two problems during this upgrade.

Problem 1:

```text
[root@ops-k8s-m1 k8s]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks.
[upgrade] Making sure the cluster is healthy:
[upgrade] Fetching available versions to upgrade to
[upgrade/versions] Cluster version: v1.16.15
[upgrade/versions] kubeadm version: v1.17.16
I1111 17:12:50.236748 27033 version.go:251] remote version is much newer: v1.25.4; falling back to: stable-1.17
[upgrade/versions] Latest stable version: v1.17.17
[upgrade/versions] FATAL: etcd cluster contains endpoints with mismatched versions: map[https://192.168.23.241:2379:3.3.15 https://192.168.23.242:2379:3.3.10 https://192.168.23.243:2379:3.3.10]
```

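The error means the etcd members are running different versions: the member at 192.168.23.241 reports 3.3.15, while 192.168.23.242 and 192.168.23.243 are still on the original 3.3.10. Forcing the upgrade is enough to get past this check.

Fix: change the upgrade command to `kubeadm upgrade apply v1.17.17 -f` (`-f` is short for `--force`).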
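To see which etcd member lags before forcing, the version map from that error can be split into one line per endpoint. Alternatively, the etcd image each static pod runs can be listed with kubectl; kubeadm labels its etcd pods `component=etcd`. `etcd_versions` is a hypothetical helper written against the message format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn kubeadm's "... mismatched versions: map[URL:VER ...]"
# message (read from stdin) into one "endpoint version" line per member.
etcd_versions() {
  sed -n 's/.*map\[\(.*\)].*/\1/p' \
    | tr ' ' '\n' \
    | sed -E 's#^(https?://[^:]+:[0-9]+):(.*)$#\1 \2#'
}

# Alternative check against the cluster:
# kubectl -n kube-system get pods -l component=etcd \
#   -o jsonpath='{range .items[*]}{.spec.nodeName}{" "}{.spec.containers[0].image}{"\n"}{end}'
```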

Problem 2: after the upgrade, kubelet started but the node stayed in the NotReady state. The kubelet status and logs:

```text
[root@ops-k8s-m1 k8s]# systemctl status kubelet
● kubelet.service - kubelet: The Kubernetes Node Agent
   Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
  Drop-In: /usr/lib/systemd/system/kubelet.service.d
           └─10-kubeadm.conf
   Active: active (running) since Mon 2022-11-14 10:26:16 CST; 34s ago
     Docs: https://kubernetes.io/docs/
 Main PID: 24321 (kubelet)
    Tasks: 15
   Memory: 30.8M
   CGroup: /system.slice/kubelet.service
           └─24321 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --cgroup-driver=cgroupfs --network-plugin=cni --pod-infra-container-image=harbor.dragonpa...

Nov 14 10:26:46 ops-k8s-m1 kubelet[24321]: "capabilities": {
Nov 14 10:26:46 ops-k8s-m1 kubelet[24321]: "portMappings": true
Nov 14 10:26:46 ops-k8s-m1 kubelet[24321]: }
Nov 14 10:26:46 ops-k8s-m1 kubelet[24321]: }
Nov 14 10:26:46 ops-k8s-m1 kubelet[24321]: ]
Nov 14 10:26:46 ops-k8s-m1 kubelet[24321]: }
Nov 14 10:26:46 ops-k8s-m1 kubelet[24321]: : [failed to find plugin "flannel" in path [/opt/cni/bin]]
Nov 14 10:26:46 ops-k8s-m1 kubelet[24321]: W1114 10:26:46.665687 24321 cni.go:237] Unable to update cni config: no valid networks found in /etc/cni/net.d
Nov 14 10:26:47 ops-k8s-m1 kubelet[24321]: E1114 10:26:47.178298 24321 kubelet.go:2190] Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized
Nov 14 10:26:49 ops-k8s-m1 kubelet[24321]: E1114 10:26:49.563003 24321 csi_plugin.go:287] Failed to initialize CSINode: error updating CSINode annotation: timed out waiting for the condition; caused by: the server could not find the requested resource
```

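Fix: the logs show the network plugin failing to load. I traced this to my flannel v0.11.0 being too old and incompatible after the upgrade, so I upgraded flannel to v0.15.0, which solved the problem (the configuration stayed the same; only the image version changed). The flannel config file is shown below. Note that only the amd64 DaemonSet's images were bumped to v0.15.0; the arm64, arm, ppc64le and s390x DaemonSets still reference v0.11.0 images.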

```yaml
# [root@ops-k8s-m1 network]# cat flannel.yaml
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  seLinux:
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "172.23.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.15.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.15.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
```
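The kubelet error names a specific binary (`failed to find plugin "flannel" in path [/opt/cni/bin]`). After applying the manifest, it can be worth checking on each node that every plugin referenced by the CNI config is present. `missing_cni_plugins` is a hypothetical helper. The plugin list is an assumption based on the `cni-conf.json` above: `flannel` and `portmap`, plus `bridge`, which flannel's CNI plugin delegates to by default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each CNI plugin name (args 2..N) that has no
# executable binary in the directory given as $1. No output means all are present.
missing_cni_plugins() {
  local dir="$1" p
  shift
  for p in "$@"; do
    [ -x "${dir}/${p}" ] || echo "$p"
  done
}

# Usage on a node, then re-apply flannel and wait for the amd64 DaemonSet:
# missing_cni_plugins /opt/cni/bin flannel portmap bridge
# kubectl apply -f flannel.yaml
# kubectl -n kube-system rollout status ds/kube-flannel-ds-amd64
```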

With both problems fixed, the upgrade completed successfully:

```text
[root@ops-k8s-m2 ~]# kubectl get node
NAME         STATUS   ROLES    AGE    VERSION
ops-k8s-m1   Ready    master   4d2h   v1.17.17
ops-k8s-m2   Ready    master   4d2h   v1.17.17
ops-k8s-m3   Ready    master   4d2h   v1.17.17
```

Upgrade kubernetes v1.17.17 to kubernetes v1.18.20

Upgrade commands

```shell
yum install -y kubeadm-1.18.20 kubelet-1.18.20 kubectl-1.18.20 --disableexcludes=kubernetes
kubeadm upgrade plan
kubeadm upgrade apply v1.18.20
systemctl daemon-reload
systemctl restart kubelet
kubectl get node
```

This one also upgraded smoothly:

```text
[root@ops-k8s-m3 ~]# kubectl get node
NAME         STATUS   ROLES    AGE    VERSION
ops-k8s-m1   Ready    master   4d2h   v1.18.20
ops-k8s-m2   Ready    master   4d2h   v1.18.20
ops-k8s-m3   Ready    master   4d2h   v1.18.20
```

Upgrade kubernetes v1.18.20 to kubernetes v1.19.16

Upgrade commands

```shell
yum install -y kubeadm-1.19.16 kubelet-1.19.16 kubectl-1.19.16 --disableexcludes=kubernetes
kubeadm upgrade plan
kubeadm upgrade apply v1.19.16
systemctl daemon-reload
systemctl restart kubelet
kubectl get node
```

This upgrade also went smoothly:

```text
NAME         STATUS   ROLES    AGE    VERSION
ops-k8s-m1   Ready    master   4d3h   v1.19.16
ops-k8s-m2   Ready    master   4d3h   v1.19.16
ops-k8s-m3   Ready    master   4d3h   v1.19.16
```

The final upgrade succeeded as well, completing the full path from v1.15.3 to v1.19.16.

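Testing

To test, run an application. My cluster consists of only three master nodes, so I first removed the master taint to use them as worker nodes (not recommended in production):

```shell
kubectl taint node ops-k8s-m1 node-role.kubernetes.io/master-
kubectl taint node ops-k8s-m2 node-role.kubernetes.io/master-
kubectl taint node ops-k8s-m3 node-role.kubernetes.io/master-
```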
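Since removing the taint is meant for testing only, it helps to have the reverse at hand. A sketch with a hypothetical `set_master_schedulable` helper; restoring puts back the `node-role.kubernetes.io/master:NoSchedule` taint that kubeadm applies to control-plane nodes in these versions. `DRY_RUN=1` prints the commands.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: "on" removes the master taint so pods can be scheduled,
# anything else puts the NoSchedule taint back. Remaining args are node names.
set_master_schedulable() {
  local mode="$1" n
  shift
  for n in "$@"; do
    if [ "$mode" = on ]; then
      run kubectl taint node "$n" node-role.kubernetes.io/master-
    else
      run kubectl taint node "$n" node-role.kubernetes.io/master=:NoSchedule
    fi
  done
}

# After testing:
# set_master_schedulable off ops-k8s-m1 ops-k8s-m2 ops-k8s-m3
```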

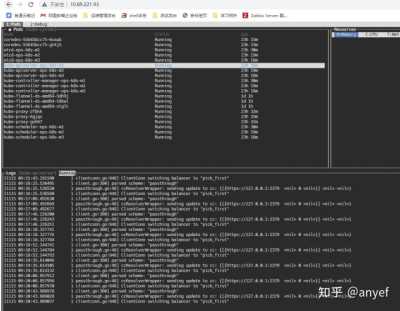

Create a kubebox application:

```text
[root@ops-k8s-m1 kubebox]# kubectl apply -f kubebox-deploy.yml
service/kubebox created
[root@ops-k8s-m1 kubebox]# kubectl get pod -n kube-ops
NAME                       READY   STATUS    RESTARTS   AGE
kubebox-676ff9d4f4-bdszh   1/1     Running   0          113s
```

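Accessing it works as well.

Also check the logs of the components in kube-system for errors, as well as each node's messages log (/var/log/messages). The same method can also be used to upgrade to Kubernetes versions beyond v1.19.16.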
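A quick way to start that check is to list pods that are not fully ready. The `unhealthy_pods` helper below is hypothetical; it assumes the default column layout of `kubectl get pods -A --no-headers` (NAMESPACE, NAME, READY, STATUS, ...) and skips `Completed` pods.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods -A --no-headers` output on stdin and
# print "namespace/name STATUS READY" for pods not Running or not fully ready.
unhealthy_pods() {
  awk '$4 == "Completed" { next }
       { split($3, r, "/"); if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2, $4, $3 }'
}

# Usage:
# kubectl get pods -A --no-headers | unhealthy_pods
# kubectl -n kube-system logs <pod-name>   # then inspect anything it reports
```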

Original article: https://www.lishiwei.com.cn

Official documentation for reference: https://kubernetes.io/zh-cn/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/